Vatsal Shah

Co-Founder + CEO

This article, provided as a contribution to Forbes, features original content from Forbes by Vatsal Shah, CEO and Co-founder at Litmus.

2025 is the year of enterprise transformation, and scale is the number one challenge on the minds of today's manufacturing leaders.

The complexities surrounding the use of industrial data in enterprise decision-making processes have long been recognized. If leveraging industrial data were straightforward, businesses would have capitalized on its potential decades ago. However, the crux of the challenge lies in the legacy Operational Technology (OT) systems, which were not designed with the foresight of aligning with the rapidly evolving Information Technology (IT) landscape and its burgeoning innovations, be it in connectivity, the advent of artificial intelligence (AI), or the broader recognition of data as a vital asset. These legacy systems present a formidable barrier to integration and utilization of industrial data, marking a critical misalignment with modern IT practices and needs.

A big problem with many horizontal solutions is how they split up the steps of managing and using industrial data, starting from the moment data is first collected. Each step in the process of working with data might use a different tool. For example, collecting data from many sources requires a dedicated tool, which might not work well with the tools used later to view, analyze, or secure the data. This makes it hard to turn raw data at its source into something you can really use. The end result: data gets trapped in one place, becomes mixed up, or gets completely lost.

Using so many different tools to handle data makes everything more complicated. This can slow down how quickly and well companies can use their industrial data in the cloud, AI systems, or other enterprise-level applications.

Also, with all these fragmented solutions, it is hard to build an environment where everything works together well, which you need in order to use AI or other smart ways of looking at and using the data. If the part where you collect data is separate from the part where you use it, companies can't see the big picture or change things quickly. This means they can't make smart choices fast or come up with new ideas with agility. The disjointed nature of such solutions starkly highlights their inadequacy in addressing the finely tuned requirements of operational technology, leaving businesses caught in a web of incompatible systems and bottlenecked by the very tools supposed to drive their digital transformation.

Today there is a greater need for real-time data processing and intelligence that can be deployed directly at the source of data generation—right at the edge of the network.

The rise of intelligence at the edge is closely tied to the advancements in AI, particularly the development of small language models which can be embedded directly into operational processes. These models bring sophisticated data analysis and decision-making capabilities closer to the physical locations where data originates. By processing data locally, these systems can perform tasks such as predictive maintenance, quality control, and operational optimization almost instantaneously, reducing the latency that can be prevalent with cloud-only solutions.
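To make the idea of local processing concrete, here is a minimal Python sketch of an edge-side check that flags unusual sensor readings without a round trip to the cloud. It is an illustration only, not a description of any particular product: the `EdgeAnomalyDetector` class, the window size, the threshold, and the sample vibration values are all hypothetical, and a simple rolling statistical baseline stands in for the more sophisticated models discussed above.

```python
from collections import deque
from statistics import mean, stdev


class EdgeAnomalyDetector:
    """Hypothetical detector: flags readings far from a rolling local baseline."""

    def __init__(self, window=20, threshold=3.0, min_samples=5):
        self.readings = deque(maxlen=window)  # recent normal readings only
        self.threshold = threshold            # allowed deviation, in standard deviations
        self.min_samples = min_samples        # readings needed before checks begin

    def check(self, value):
        """Return True if `value` looks anomalous relative to recent readings."""
        is_anomaly = False
        if len(self.readings) >= self.min_samples:
            baseline = mean(self.readings)
            spread = stdev(self.readings)
            if spread > 0 and abs(value - baseline) > self.threshold * spread:
                is_anomaly = True
        if not is_anomaly:
            # Keep anomalies out of the baseline so one spike does not skew it.
            self.readings.append(value)
        return is_anomaly


# Made-up vibration readings (mm/s) with one obvious spike.
detector = EdgeAnomalyDetector(window=20, threshold=3.0)
vibration_mm_s = [2.0, 2.1, 1.9, 2.0, 2.2, 2.1, 2.0, 9.5, 2.1]
alerts = [v for v in vibration_mm_s if detector.check(v)]
print(alerts)  # [9.5]
```

Because the decision is made on the device itself, only the alert (rather than every raw reading) would need to travel upstream, which is where the latency benefit described above comes from.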

However, to leverage the full potential of intelligence at the edge, there's a need for platforms that can rapidly integrate the latest technologies without requiring continuous investments in new software. This is where modern deployment technologies such as containerization and orchestration tools like Kubernetes come into play. These technologies facilitate the quick roll-out of updates and new features across distributed environments, enabling businesses to stay at the cutting edge of innovation without significant additional expenditures.

Just a few years ago, concepts like Kubernetes and container technology were considered advanced and perhaps optional. Today, they have become indispensable for any industrial operation serious about scaling efficiently and maintaining competitive advantage. These tools offer considerable flexibility, allowing organizations to scale operations across various environments, from the edge to private clouds to public clouds, depending on their needs and regulatory demands.

For enterprise leaders looking to future-proof their operations, adopting an edge computing strategy enriched with the latest container technology and supported by robust platforms is not just a strategic move—it's a critical necessity to keep pace with the rapid evolution of industrial technologies and market requirements.

Along with technology agility, addressing the complexity and bespoke needs of industrial settings necessitates the adoption of open architectures and the cultivation of strong partner ecosystems. Open, modular architectures allow for the seamless integration of Industrial DataOps practices with existing systems, ensuring that businesses can enhance their data capabilities without disrupting ongoing operations. Furthermore, partner ecosystems play a crucial role in providing the specialized knowledge and solutions tailored to unique industry requirements. These relationships help in abstracting the system's complexities, allowing enterprises to focus on scaling operations and driving innovation. Tools and platforms that were previously considered indispensable become replaceable as more adaptable and integrative options emerge.

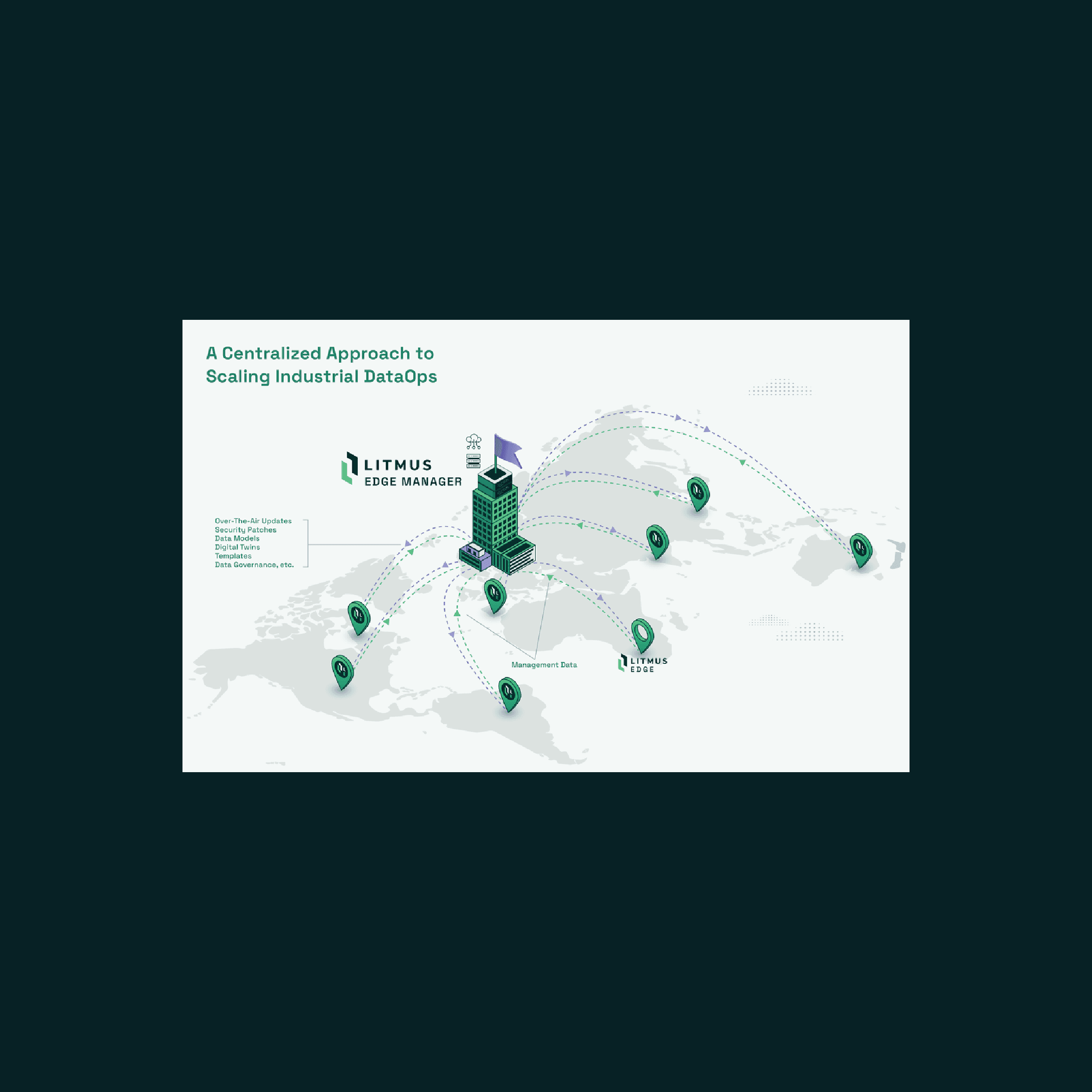

In this context, a holistic approach becomes imperative: a solution that provides a direct and simple path to harnessing industrial data at enterprise scale. This means a platform capable of managing the entire spectrum of DataOps needs, from data collection, standardization, and processing to modeling, analysis, and seamless integration. These processes are so tightly intertwined that stitching together disparate solutions from multiple horizontal providers will only slow the pace of enterprise transformation. A piecemeal approach is no longer viable in an era that demands swift and coherent adaptation. The urgency is evident: leaders must pivot decisively toward AI and other technologies that enhance the real value of Industrial DataOps, rather than persistently attempting to retrofit an outdated tech stack to meet new-age demands.

Indeed, the choice is unequivocal. Industrial leaders are increasingly recognizing the necessity of embracing a unified, streamlined approach to DataOps, one that guarantees faster time to market and bridges the longstanding gap between OT and modern IT innovations. This transition signifies a broader shift in mindset, moving beyond the limitations of horizontal solutions to adopt platforms that are inherently designed to facilitate the seamless integration and utilization of industrial data at scale.

Suranjeeta Choudhury

Director Product Marketing and Industry Relations

Suranjeeta Choudhury heads Product Marketing and Analysts Relations at Litmus.
