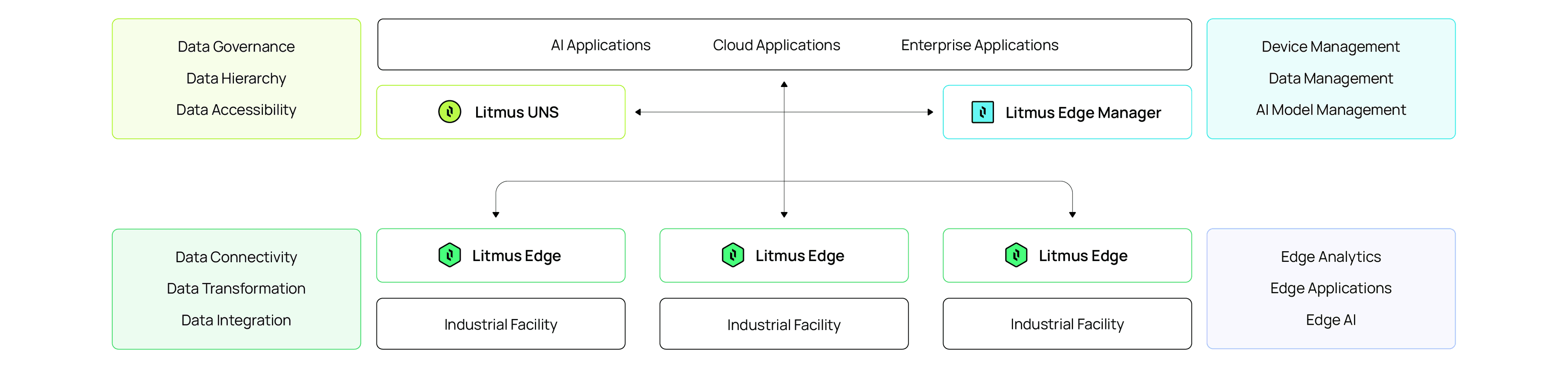

As a modern Edge Data Platform, Litmus unlocks the potential of OT (operational technology) data, powering Industrial AI with consistency, security, and speed. Deployed across all of your facilities, it provides a unified data flow between OT systems and AI applications, removing barriers to adoption and making it easier to scale AI.

Litmus embeds AI where it matters most: at the edge.

MCP Server orchestrates AI workloads so models can be deployed, updated, and executed instantly at the edge. Litmus delivers real-time reasoning and analytics exactly where decisions are made, while integrating with major cloud AI services to combine local intelligence with large-scale cloud models.

Optimized support for NVIDIA GPU drivers enables computer vision, AI models, and other GPU-intensive applications to run locally at the edge. Because inference happens on site, local AI use cases no longer require sending data to the cloud.

Small language models (SLMs) hosted locally within Litmus Edge enable dozens of use cases, including asset knowledge and troubleshooting as well as process optimization and improvement. Because the SLMs run at the edge, sensitive data stays on site, which also makes them suitable for air-gapped environments.

Together with MCP Server, Litmus provides structured Data & Metadata APIs that give AI agents the grounding they need: historical context, asset models, and process intelligence. With this grounding, agents can go beyond generic recommendations and deliver accurate, context-rich guidance that operators can trust.