A guide to connecting Litmus Edge Manager (LEM) with your Lenses Kafka monitoring.

DataOps

Big data, fast data, IoT data, small data. The use of data is growing as organizations transition to becoming data-driven. Data analytics and integrations are increasingly critical because data needs to be shared and made available across the whole enterprise.

Dev teams organised around DevOps principles are able to deliver high-quality applications to production via automated and repeatable methods.

DataOps builds on these lessons to allow data engineers, as well as any data-literate user, to build and operate data streams and deploy quickly.

DataOps follows, at its core, the same principles as DevOps.

Data engineering has been, and will continue to be, an important role in any organisation, regardless of whether it already has a large data engineering capacity for ongoing integration, data marts and data warehouses or is just starting out.

However, this requires confidence in a stable and secure environment, so that data engineers and users can maximize value creation for all stakeholders.

Confidence with Apache Kafka depends on engineers having unified visibility into all real-time data and applications. Otherwise they risk missing operation-critical information, since countless failure modes can affect the performance and availability of a Kafka streaming app.

Monitoring one's Kafka infrastructure by looking at metrics such as replica fetcher threads and disk write wait times won't save anybody if there is schema drift, incorrect ACLs, maxed-out quotas or poor partitioning.

Apache Kafka is a complex black box, requiring monitoring of many services, including Schema Registry, Kafka Connect and real-time flows.

Organizations using Apache Kafka, which can also include Litmus Edge Manager to stream all your Litmus Edge process data and events, always strive to answer one simple question:

Are my real-time data platform, streaming applications and data healthy?

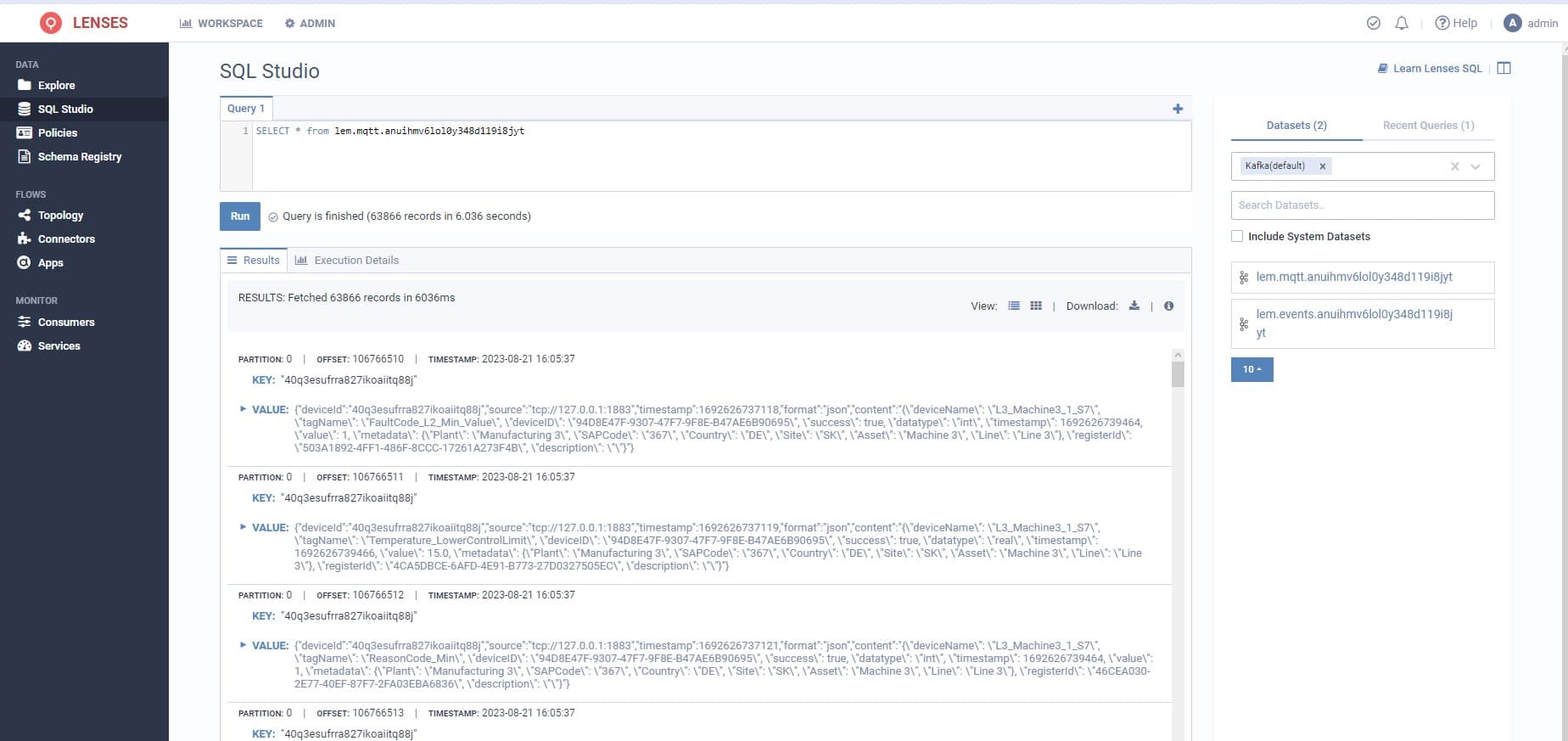

This guide shows how easy it is to connect the Litmus Edge Manager Kafka Broker Integration with Lenses, so that you can include Litmus Edge Manager in your organization's monitoring of Kafka infrastructure and application performance from a single role-based and secured Kafka UI.

Spinning up full-service visibility helps you stay on top of all your Litmus Edge data and the health of the apps that make use of this data.

It also allows you to receive notifications when alerts are raised, for example for: